MIDI (Musical Instrument Digital Interface) is an electronic musical instrument industry specification that enables a wide variety of digital musical instruments, computers and other related devices to connect and communicate with one another. It comprises a set of standard commands for that communication, as well as a hardware standard that guarantees compatibility between devices. MIDI equipment captures note events and adjustments to controls such as knobs and buttons, encodes them as digital messages, and sends these messages to other devices where they control sound generation and other features.

This data can be recorded into a hardware or software device called a sequencer, which can be used to edit the data and to play it back at a later time. MIDI carries note event messages that specify notation, pitch and velocity, control signals for parameters such as volume, vibrato, audio panning and cues, and clock signals that set and synchronize tempo between multiple devices. A single MIDI link can carry up to sixteen channels of information, each of which can be routed to a separate device. The 1983 introduction of the MIDI protocol revolutionized the music industry.

MIDI technology was standardized by a panel of music industry representatives, and is maintained by the MIDI Manufacturers Association (MMA). All official MIDI standards are jointly developed and published by the MMA in Los Angeles, California, USA, and for Japan, the MIDI Committee of the Association of Musical Electronics Industry (AMEI) in Tokyo.

Wave-table files provide an accurate rendering of real instrument sounds but are quite large. For simple music, we might be satisfied with FM synthesis versions of audio signals that could easily be generated by a sound card. A sound card is a PC expansion board capable of manipulating and outputting sounds through speakers connected to the board, recording sound input from a microphone connected to the computer, and manipulating sound stored on a disk.

If we are willing to be satisfied with the sound card’s defaults for many of the sounds we wish to include in a multimedia project, we can use a simple scripting language and hardware setup called MIDI.

MIDI Overview

MIDI, which dates from the early 1980s, is an acronym that stands for Musical Instrument Digital Interface. It forms a protocol adopted by the electronic music industry that enables computers, synthesizers, keyboards, and other musical devices to communicate with each other. A synthesizer produces synthetic music and is included on sound cards, using one of the two methods discussed above.

The MIDI standard is supported by most synthesizers, so sounds created on one can be played and manipulated on another and sound reasonably close. Computers must have a special MIDI interface, but this is incorporated into most sound cards. The sound card must also have both DA and AD converters.

MIDI is a scripting language — it codes “events” that stand for the production of certain sounds. Therefore, MIDI files are generally very small. For example, a MIDI event might include values for the pitch of a single note, its duration, and its volume.

Terminology. A synthesizer was, and still may be, a stand-alone sound generator that can vary pitch, loudness, and tone color. (The pitch is the musical note the instrument plays: a C, as opposed to a G, say.) It can also change additional music characteristics, such as attack and delay time. A good (musician’s) synthesizer often has a microprocessor, keyboard, control panels, memory, and so on. However, inexpensive synthesizers are now included on PC sound cards. Units that generate sound are referred to as tone modules or sound modules.

A sequencer started off as a special hardware device for storing and editing a sequence of musical events, in the form of MIDI data. Now it is more often a software music editor on the computer.

A MIDI keyboard produces no sound, instead generating sequences of MIDI instructions, called MIDI messages. These are rather like assembler code and usually consist of just a few bytes. You might have 3 minutes of music, say, stored in only 3 kB. In comparison, a wave-table file (WAV) stores 1 minute of music in about 10 MB. In MIDI parlance, the keyboard is referred to as a keyboard controller.
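The size gap quoted above can be checked with a little arithmetic. The sketch below assumes CD-quality audio (44.1 kHz, 16-bit, stereo); these parameters are an assumption, since the text does not say how the 10 MB figure was obtained.

```python
# Assumed CD-quality parameters (not stated in the text).
SAMPLE_RATE_HZ = 44_100
BYTES_PER_SAMPLE = 2
CHANNELS = 2


def pcm_bytes(seconds: int) -> int:
    """Size in bytes of uncompressed PCM audio of the given duration."""
    return seconds * SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * CHANNELS


wav_one_minute = pcm_bytes(60)        # roughly 10 MB
midi_three_minutes = 3 * 1024         # the 3 kB figure quoted in the text
ratio = pcm_bytes(180) / midi_three_minutes

print(wav_one_minute)                 # 10584000
```

Under these assumptions, three minutes of sampled audio is on the order of ten thousand times larger than the equivalent MIDI data.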

MIDI Concepts. Music is organized into tracks in a sequencer. Each track can be turned on or off on recording or playing back. Usually, a particular instrument is associated with a MIDI channel. MIDI channels are used to separate messages. There are 16 channels, numbered from 0 to 15. The channel forms the last four bits (the least significant bits) of the message. The idea is that each channel is associated with a particular instrument — for example, channel 1 is the piano, channel 10 is the drums. Nevertheless, you can switch instruments midstream, if desired, and associate another instrument with any channel.

The channel can also be used as a placeholder in a message. If the first four bits are all ones, the message is interpreted as a system common message.

Along with channel messages (which include a channel number), several other types of messages are sent, such as a general message for all instruments indicating a change in tuning or timing; these are called system messages. It is also possible to send a special message to an instrument’s channel that allows sending many notes without a channel specified. We will describe these messages in detail later.

The way a synthetic musical instrument responds to a MIDI message is usually by simply ignoring any “play sound” message that is not for its channel. If several messages are for its channel, say several simultaneous notes being played on a piano, then the instrument responds, provided it is multi-voice (that is, it can play more than a single note at once).

It is easy to confuse the term voice with the term timbre. The latter is MIDI terminology for just what instrument we are trying to emulate (for example, a piano as opposed to a violin). It is the quality of the sound. An instrument (or sound card) that is multi-timbral is capable of playing many different sounds at the same time (e.g., piano, brass, drums).

On the other hand, the term “voice”, while sometimes used by musicians to mean the same thing as timbre, is used in MIDI to mean every different timbre and pitch that the tone module can produce at the same time. Synthesizers can have many (typically 16, 32, 64, 256, etc.) voices. Each voice works independently and simultaneously to produce sounds of different timbre and pitch.

The term polyphony refers to the number of voices that can be produced at the same time. So a typical tone module may be able to produce “64 voices of polyphony” (64 different notes at once) and be “16-part multi-timbral” (can produce sounds like 16 different instruments at once).

How different timbres are produced digitally is by using a patch, which is the set of control settings that define a particular timbre. Patches are often organized into databases, called banks. For true aficionados, software patch editors are available.

A standard mapping specifying just what instruments (patches) will be associated with what channels has been agreed on and is called General MIDI. In General MIDI, 128 patches are associated with standard instruments, and channel 10 is reserved for percussion instruments.

For most instruments, a typical message might be Note On (meaning, e.g., a keypress), consisting of what channel, what pitch, and what velocity (i.e., volume). For percussion instruments, the pitch data means which kind of drum. A Note On message thus consists of a status byte (the Note On opcode and the channel) followed by two data bytes (the pitch and the velocity). It is followed by a Note Off message (key release), which also has a pitch (which note to turn off) and, for consistency, one supposes, a velocity (often set to zero and ignored).

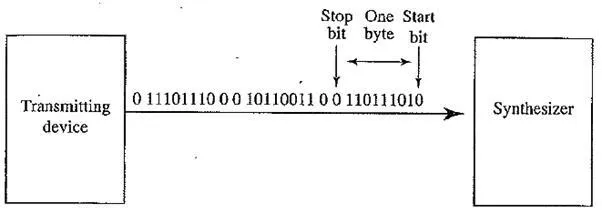

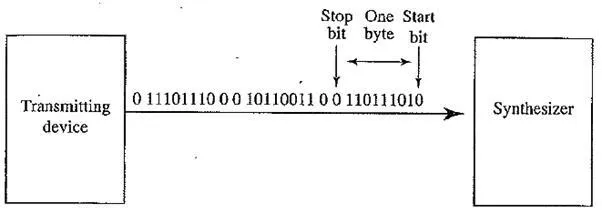

Stream of 10-bit bytes; for typical MIDI messages, these consist of {status byte, data byte, data byte} = {Note On, Note Number, Note Velocity}

The data in a MIDI status byte is between 128 and 255; each of the data bytes is between 0 and 127. Actual MIDI bytes are 8 bits, plus a start bit (logic 0) and a stop bit (logic 1), making them 10-bit “bytes”. The above figure shows the MIDI datastream.
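The value ranges above amount to a test on the most significant bit of each byte. A minimal Python sketch follows; the function names are illustrative and not part of the MIDI standard.

```python
def is_status_byte(value: int) -> bool:
    """Status bytes have the most significant bit set (128..255)."""
    return 0x80 <= value <= 0xFF


def is_data_byte(value: int) -> bool:
    """Data bytes have the most significant bit clear (0..127)."""
    return 0x00 <= value <= 0x7F


# A Note On message: one status byte followed by two data bytes.
message = bytes([0x90, 60, 100])
kinds = ["status" if is_status_byte(b) else "data" for b in message]
print(kinds)  # ['status', 'data', 'data']
```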

A MIDI device often is capable of programmability, which means it has filters available for changing the bass and treble response and can also change the “envelope” describing how the amplitude of a sound changes over time. The following figure shows a model of a digital instrument’s response to Note On / Note Off messages.

MIDI sequencers (editors) allow you to work with standard music notation or get right into the data, if desired. MIDI files can also store wave-table data. The advantage of wave-table data (WAV files) is that it much more precisely stores the exact sound of an instrument. A sampler is used to sample the audio data; for example, a “drum machine” always stores wave-table data of real drums.

Sequencers employ several techniques for producing more music from what is actually available. For example, looping over (repeating) a few bars can be more or less convincing. Volume can be easily controlled over time; this is called time-varying amplitude modulation. More interestingly, sequencers can also accomplish time compression or expansion with no pitch change.

While it is possible to change the pitch of a sampled instrument, if the key change is large, the resulting sound becomes displeasing. For this reason, samplers employ multi-sampling. A sound is recorded using several band-pass filters, and the resulting recordings are assigned to different keyboard keys. This makes frequency shifting for a change of key more reliable, since less shift is involved for each note.

Stages of amplitude versus time for a music note

Hardware Aspects of MIDI

The MIDI hardware setup consists of a 31.25 kbps (kilobits per second) serial connection, with each 10-bit byte comprising 8 data bits plus a start bit (logic 0) and a stop bit (logic 1). Usually, MIDI-capable units are either input devices or output devices, not both.
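The link speed and the 10-bit framing together determine how long a message occupies the wire. The following sketch works through that arithmetic; the constant and function names are illustrative.

```python
BAUD_RATE = 31_250            # bits per second on a MIDI link
BITS_PER_BYTE_ON_WIRE = 10    # 8 data bits plus start and stop bits

# Whole bytes that fit through the link each second.
BYTES_PER_SECOND = BAUD_RATE // BITS_PER_BYTE_ON_WIRE


def transmit_time_ms(n_bytes: int) -> float:
    """Milliseconds needed to send n_bytes over the serial link."""
    return n_bytes * BITS_PER_BYTE_ON_WIRE * 1000 / BAUD_RATE


note_on_ms = transmit_time_ms(3)       # a 3-byte message such as Note On
print(BYTES_PER_SECOND, note_on_ms)    # 3125 0.96
```

A three-byte message therefore takes just under a millisecond, which is why notes sent one after another in serial still sound effectively simultaneous.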

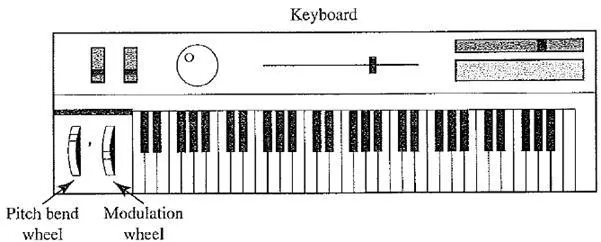

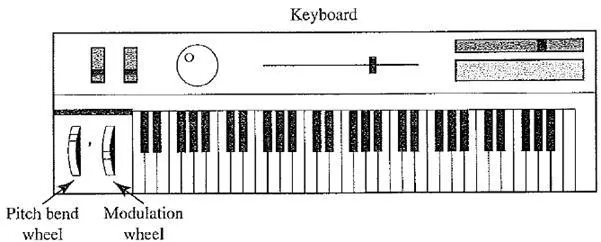

The following figure shows a traditional synthesizer. The modulation wheel adds vibrato. Pitch bend alters the frequency, much like pulling a guitar string over slightly. There are often other controls, such as foot pedals, sliders, and so on.

The physical MIDI ports consist of 5-pin connectors labeled IN and OUT and a third connector, THRU. This last data channel simply copies data entering the IN channel. MIDI communication is half-duplex. MIDI IN is the connector via which the device receives all MIDI data. MIDI OUT is the connector through which the device transmits all the MIDI data it generates itself. MIDI THRU is the connector by which the device echoes the data it receives from MIDI IN (and only that; all the data generated by the device itself is sent via MIDI OUT). These ports are on the sound card or interface externally, either on a separate card in a PC expansion slot or using a special interface to a serial or parallel port.

A MIDI Synthesizer

A typical MIDI setup

The above figure shows a typical MIDI sequencer setup. Here, the MIDI OUT of the keyboard is connected to the MIDI IN of a synthesizer and then THRU to each of the additional sound modules. During recording, a keyboard-equipped synthesizer sends MIDI messages to a sequencer, which records them. During playback, messages are sent from the sequencer to all the sound modules and the synthesizer, which play the music.

Structure of MIDI Messages

MIDI messages can be classified into two types, as in the following figure — channel messages and system messages — and further classified as shown. Each type of message will be examined below.

MIDI message taxonomy

MIDI voice messages

Channel Messages. A channel message can have up to 3 bytes; the first is the status byte (the opcode, as it were), and has its most significant bit set to 1. The four low-order bits identify which of the 16 possible channels this message belongs to, with the three remaining bits holding the message type. For a data byte, the most significant bit is set to zero.
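The bit layout just described can be unpacked with a shift and a mask. The sketch below is illustrative: the function name and lookup table are not part of the standard, although the high-nibble values are the standard channel message opcodes. The returned channel is the raw 4-bit value (0 to 15).

```python
# High nibble of the status byte mapped to the channel message type.
CHANNEL_MESSAGE_TYPES = {
    0x8: "Note Off",
    0x9: "Note On",
    0xA: "Polyphonic Key Pressure",
    0xB: "Control Change",
    0xC: "Program Change",
    0xD: "Channel Pressure",
    0xE: "Pitch Bend",
}


def decode_status(status: int) -> tuple[str, int]:
    """Split a channel-message status byte into (message type, channel)."""
    if not 0x80 <= status <= 0xEF:
        raise ValueError("not a channel-message status byte")
    return CHANNEL_MESSAGE_TYPES[status >> 4], status & 0x0F


print(decode_status(0x9C))  # ('Note On', 12)
```

Status bytes whose high nibble is &HF are rejected here because they carry no channel; they are system messages.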

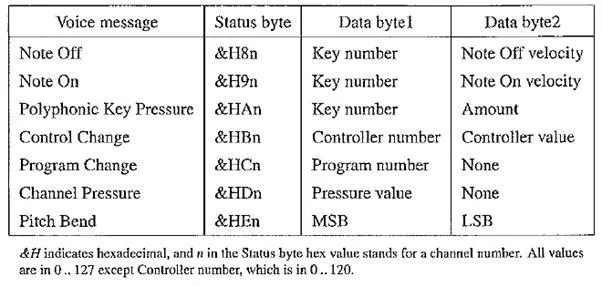

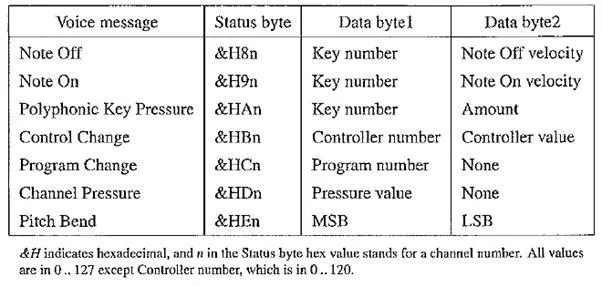

Voice Messages. This type of channel message controls a voice — that is, sends information specifying which note to play or to turn off — and encodes key pressure. Voice messages are also used to specify controller effects, such as sustain, vibrato, tremolo, and the pitch wheel. The above table lists these operations.

For Note On and Note Off messages, the velocity is how quickly the key is played. Typically, a synthesizer responds to a higher velocity by making the note louder or brighter. Note On makes a note occur, and the synthesizer also attempts to make the note sound like the real instrument while the note is playing. Pressure messages can be used to alter the sound of notes while they are playing. The Channel Pressure message is a force measure for the keys on a specific channel (instrument) and has an identical effect on all notes playing on that channel. The other pressure message, Polyphonic Key Pressure (also called Key Pressure), specifies how much volume keys played together are to have and can be different for each note in a chord. Pressure is also called aftertouch.

The Control Change instruction sets various controllers (faders, vibrato, etc.). Each manufacturer may make use of different controller numbers for different tasks. However, controller 1 is likely the modulation wheel (for vibrato).

For example, a Note On message is followed by two bytes, one to identify the note and one to specify the velocity. Therefore, to play note number 80 with maximum velocity on channel 13, the MIDI device would send the following three hex byte values: &H9C &H50 &H7F. (Channel 13 is encoded as &HC because the 4-bit channel field counts from 0.) Notes are numbered such that middle C has number 60.
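The three bytes in this example can be assembled programmatically. In the sketch below, `note_on` is a hypothetical helper that takes the channel as the raw 4-bit value (0 to 15), so the musician's "channel 13" is passed as 13 - 1.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a Note On message; channel is the raw 4-bit value (0..15)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0..15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("data bytes must be 0..127")
    # Status byte: opcode 0x9 in the high nibble, channel in the low nibble.
    return bytes([0x90 | channel, note, velocity])


message = note_on(13 - 1, 80, 127)
print(message.hex())  # 9c507f
```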

To play two notes simultaneously (effectively), first we would send a Program Change message for each of two channels. Recall that Program Change means to load a particular patch for that channel. So far, we have attached two timbres to two different channels. Then sending two Note On messages (in serial) would turn on both channels.

Alternatively, we could also send a Note On message for a particular channel and then another Note On message, with another pitch, before sending the Note Off message for the first note. Then we would be playing two notes effectively at the same time on the same instrument.
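Both approaches can be written out as byte sequences. This is a sketch under stated assumptions: the helper functions are hypothetical, channels are raw 4-bit values (0 to 15), and the patch numbers 0 and 40 are placeholders chosen only for illustration.

```python
def program_change(channel: int, patch: int) -> bytes:
    """Load a patch on a channel (one data byte)."""
    return bytes([0xC0 | channel, patch])


def note_on(channel: int, note: int, velocity: int) -> bytes:
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    return bytes([0x80 | channel, note, velocity])


# Approach 1: attach two timbres to two channels, then one note on each.
two_channels = (
    program_change(0, 0) + program_change(1, 40)
    + note_on(0, 60, 100) + note_on(1, 64, 100)
)

# Approach 2: a second Note On arrives before the first note's Note Off,
# so both notes sound together on the same channel.
same_channel = (
    note_on(0, 60, 100) + note_on(0, 64, 100)
    + note_off(0, 60) + note_off(0, 64)
)

print(two_channels.hex())
print(same_channel.hex())
```

The second approach only produces two audible notes if the receiving instrument is multi-voice.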

Polyphonic Pressure refers to how much force simultaneous notes have on several instruments. Channel Pressure refers to how much force a single note has on one instrument.

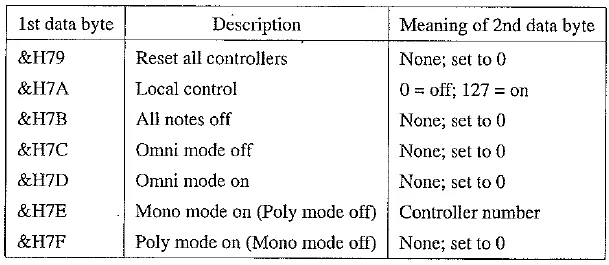

Channel Mode Messages. Channel mode messages form a special case of the Control Change message, and therefore all mode messages have opcode B (so the message is “&HBn,” or 1011nnnn). However, a Channel Mode message has its first data byte in 121 through 127 (&H79 to &H7F).
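The rule for telling a Channel Mode message apart from an ordinary Control Change can be expressed as two checks. A minimal sketch, with an illustrative function name:

```python
def is_channel_mode_message(message: bytes) -> bool:
    """True if message is a Control Change whose first data byte is 121..127."""
    if len(message) < 2:
        return False
    status, first_data = message[0], message[1]
    is_control_change = (status & 0xF0) == 0xB0   # opcode B, any channel
    return is_control_change and 121 <= first_data <= 127


print(is_channel_mode_message(bytes([0xB0, 123, 0])))   # True
print(is_channel_mode_message(bytes([0xB0, 1, 64])))    # False
```

The first example uses data byte 123 (All Notes Off); the second uses controller 1, which the text identifies as the likely modulation wheel.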

MIDI mode messages

Channel mode messages determine how an instrument processes MIDI voice messages. Some examples include respond to all messages, respond just to the correct channel, don’t respond at all, or go over to local control of the instrument.

Recall that the status byte is “&HBn,” where n is the channel. The data bytes have meanings as shown in the above table. Local Control Off means that the keyboard should be disconnected from the synthesizer (and another, external, device will be used to control the sound). All Notes Off is a handy command, especially if, as sometimes happens, a bug arises such that a note is left playing inadvertently. Omni means that devices respond to messages from all channels. The usual mode is OMNI OFF: pay attention to your own messages only, and do not respond to every message regardless of what channel it is on. Poly means a device will play back several notes at once if requested to do so. The usual mode is POLY ON.

In POLY OFF (monophonic mode), the argument that represents the number of monophonic channels can have a value of zero, in which case it defaults to the number of voices the receiver can play; or it may be set to a specific number of channels. However, the exact meaning of the combination of OMNI ON / OFF and Mono / Poly depends on the specific combination, with four possibilities. Suffice it to say that the usual combination is OMNI OFF, POLY ON.

MIDI System Common messages

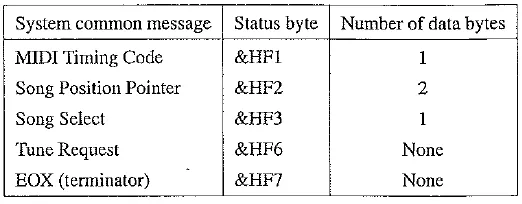

System Messages. System messages have no channel number and are meant for commands that are not channel-specific, such as timing signals for synchronization, positioning information in prerecorded MIDI sequences, and detailed setup information for the destination device. Opcodes for all system messages start with “&HF.” System messages are divided into three classifications, according to their use.

System Common Messages. The above table sets out these messages, which relate to timing or positioning. Song Position is measured in beats. The messages determine what is to be played upon receipt of a “start” real – time message (see below).

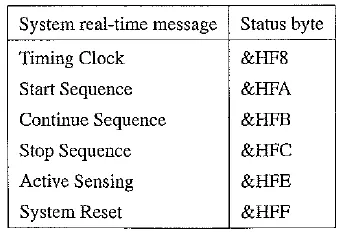

System Real-Time Messages. The following table sets out system real-time messages, which are related to synchronization.

System Exclusive Message. The final type of system message, System Exclusive messages, is included so that manufacturers can extend the MIDI standard. After the initial code, they can insert a stream of any specific messages that apply to their own product. A System Exclusive message is supposed to be terminated by a terminator byte “&HF7,” as specified in the above table. However, the terminator is optional, and the datastream may simply be ended by sending the status byte of the next message.
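The termination rule described above (end at “&HF7,” or at the next status byte if the terminator is omitted) can be sketched as a small reader. This is a simplified illustration, not a complete parser; `read_sysex` and the sample byte values are hypothetical.

```python
SYSEX_START = 0xF0
SYSEX_END = 0xF7


def read_sysex(stream: bytes, start: int = 0) -> tuple[bytes, int]:
    """Return (payload, index of the next unread byte) for a SysEx message."""
    if stream[start] != SYSEX_START:
        raise ValueError("stream does not begin with a System Exclusive byte")
    i = start + 1
    # Payload bytes are data bytes (most significant bit clear).
    while i < len(stream) and stream[i] < 0x80:
        i += 1
    payload = stream[start + 1:i]
    # Consume the terminator if present; any other status byte is left
    # in place because it begins the next message.
    if i < len(stream) and stream[i] == SYSEX_END:
        i += 1
    return payload, i


terminated = bytes([0xF0, 0x7D, 0x01, 0x02, 0xF7, 0x90])
unterminated = bytes([0xF0, 0x7D, 0x01, 0x90, 0x3C, 0x40])
print(read_sysex(terminated))     # (b'}\x01\x02', 5)
print(read_sysex(unterminated))   # (b'}\x01', 3)
```

In both cases the returned index points at the &H90 status byte that starts the following message.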

MIDI System Real-Time messages

General MIDI

For MIDI music to sound more or less the same on every machine, we would at least like to have the same patch numbers associated with the same instruments; for example, patch 1 should always be a piano, not a flugelhorn. To this end, General MIDI is a scheme for assigning instruments to patch numbers. A standard percussion map also specifies 47 percussion sounds. Where a “note” appears on a musical score determines just what percussion element is being struck. This book’s web site includes both the General MIDI Instrument Patch Map and the Percussion Key map.

Other requirements for General MIDI compatibility are that a MIDI device must support all 16 channels; must be multi-timbral (i.e., each channel can play a different instrument / program); must be polyphonic (i.e., each channel is able to play many voices); and must have a minimum of 24 dynamically allocated voices.

General MIDI Level 2. An extended General MIDI has recently been defined, with a Standard MIDI File (SMF) format defined. A nice extension is the inclusion of extra character information, such as karaoke lyrics, which can be displayed on a good sequencer.

MIDI-to-WAV Conversion

Some programs, such as early versions of Premiere, cannot include MIDI files — instead, they insist on WAV format files. Various shareware programs can approximate a reasonable conversion between these formats. The programs essentially consist of large lookup files that try to do a reasonable job of substituting predefined or shifted WAV output for some MIDI messages, with inconsistent success.