“It’s our latest DSP for embedded vision and AI, and has been built using a much faster processor architecture,” Desai explains.

“Any successful DSP solution targeting vision and AI has to be embedded. Data has to be processed on the fly, so the applications that use it need to be embedded within their own system. They also have to be power efficient and, as the use of neural networks grows, the platform needs to be future-proofed.”

According to Desai, the Vision Q6 DSP has been designed to address those requirements.

“It’s our fifth-generation device and offers significantly better vision and AI performance than its predecessor, the Vision P6 DSP,” he declares. “It provides better power efficiency too, 1.25X that of its predecessor when running at the Vision P6’s peak performance.”

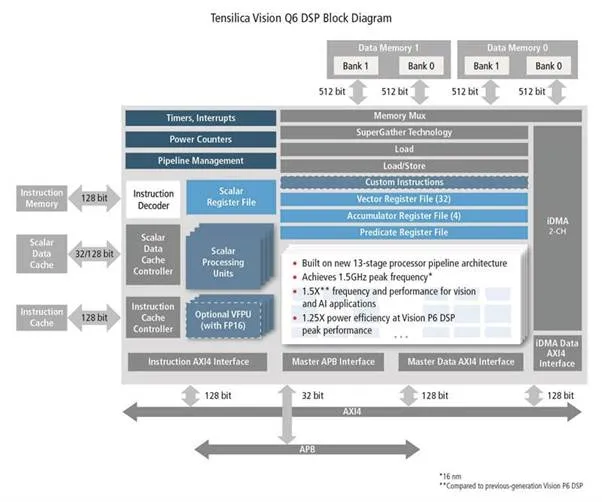

With a deeper, 13-stage processor pipeline and a system architecture designed for use with large local memories, the Vision Q6 is able to achieve a peak frequency of 1.5GHz and a typical frequency of 1GHz at 16nm, and can do so within the same floor plan area as the Vision P6 DSP.

“Designers will be able to develop high-performance products that meet their vision and AI requirements while meeting more demanding power-efficiency needs,” Desai contends.

The Vision Q6 DSP comes with an enhanced DSP instruction set. According to Desai, this results in up to 20% fewer cycles than the Vision P6 DSP for embedded vision applications and kernels such as Optical Flow, Transpose and warpAffine, and for other commonly used filters such as Median and Sobel.
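For readers less familiar with the kernels named above, the sketch below shows what a Sobel filter computes. It is written in plain Python for illustration, not as DSP code. The function name `sobel_magnitude` and the small test image are assumptions made for this example, and it says nothing about how Cadence's own libraries implement the filter.

```python
# Illustrative only: a scalar Sobel edge filter in plain Python.
# A vision DSP would apply the same arithmetic to many pixels per cycle.

# Standard 3x3 Sobel coefficients for horizontal and vertical gradients.
SOBEL_X = ((-1, 0, 1),
           (-2, 0, 2),
           (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1),
           (0, 0, 0),
           (1, 2, 1))


def sobel_magnitude(image):
    """Return |Gx| + |Gy| for each interior pixel of a 2D list of ints.

    Border pixels are left at 0 to keep the sketch short.
    """
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    pixel = image[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * pixel  # one multiply-accumulate
                    gy += SOBEL_Y[ky][kx] * pixel  # one multiply-accumulate
            out[y][x] = abs(gx) + abs(gy)
    return out


# A 4x4 test image with a vertical edge between columns 1 and 2.
image = [[0, 0, 255, 255] for _ in range(4)]
edges = sobel_magnitude(image)
```

In this form every interior pixel costs 18 multiply-accumulate steps, nine per gradient. That inner loop is the kind of work an enhanced instruction set aims to complete in fewer cycles.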

“With twice the system data bandwidth, provided by separate master/slave AXI interfaces for data/instructions and multi-channel DMA, we have also been able to alleviate the memory bandwidth challenges in vision and AI applications. Not only that, but we have reduced latency and the other overheads associated with task switching and DMA setup,” Desai explains.

Desai also says that the Vision Q6 provides backwards compatibility with the Vision P6 DSP.

“Customers will be able to preserve their software investment for an easy migration,” he says.

While the Q6 offers a significant performance boost relative to the P6, it retains the programmability developers have said they need to support rapidly evolving neural network architectures.

“We have sought to build on the success of the Vision P5 and P6 DSPs which have been designed into a number of generations of mobile application processors,” Desai explains.

The Vision Q6 DSP supports AI applications developed in the Caffe, TensorFlow and TensorFlow Lite frameworks through the Tensilica Xtensa Neural Network Compiler (XNNC).

XNNC maps neural networks into executable, highly optimised code for the Vision Q6 DSP, and does this by leveraging a comprehensive set of neural network library functions.

The Vision Q6 DSP also supports the Android Neural Network (ANN) API for on-device AI acceleration in Android-powered devices.

The software environment also features complete and optimised support for more than 1,500 OpenCV-based vision and OpenVX library functions, enabling much faster, high-level migration of existing vision applications.

“Cadence’s Vision DSPs are being adopted in a growing number of end-user applications,” says Frison Xu, marketing VP of ArcSoft, which has been working closely with Cadence to develop AI and vision-based applications and is part of Cadence’s vision and AI partner ecosystem.

“Features which include wide-vector SIMD processing, VLIW instructions, a large number of 8-bit and 16-bit MACs, and scatter/gather intrinsics make these platforms suitable for demanding neural network and vision algorithms.”
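To make the terms in that quote concrete, here is a minimal Python sketch of 8-bit multiply-accumulate (MAC) operations processed several lanes at a time, which is the basic idea behind wide-vector SIMD. The function name `simd_dot_int8` and the `lanes` parameter are hypothetical, chosen for illustration. The lane count is not a Vision Q6 specification, and the code only models the arithmetic, not the hardware.

```python
def simd_dot_int8(a, b, lanes=4):
    """Dot product of two int8 sequences, taking `lanes` elements per step.

    Returns (result, steps). `lanes` is a hypothetical vector width used
    for illustration only.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    if any(not -128 <= v <= 127 for v in list(a) + list(b)):
        raise ValueError("inputs must fit in signed 8 bits")

    acc = 0    # wide accumulator: a product of two int8 values needs up to 16 bits
    steps = 0  # number of vector steps taken
    for start in range(0, len(a), lanes):
        # One vector step: up to `lanes` multiply-accumulates performed together.
        acc += sum(x * y for x, y in zip(a[start:start + lanes],
                                         b[start:start + lanes]))
        steps += 1
    return acc, steps


weights = [127, -128, 5, 3, -7, 2, 0, 1]
pixels = [127, 127, 10, -4, 6, 9, 50, -1]
result, steps = simd_dot_int8(weights, pixels, lanes=4)
```

With four lanes the eight MACs finish in two steps. With a single lane the same result takes eight steps, which is why the number of MACs available per cycle matters so much for neural network workloads.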

The Vision Q6 DSP has been designed for embedded vision and on-device AI applications requiring performance ranging from 200 to 400 GMAC/sec. Its 384 GMAC/sec peak puts it at the upper end of that range and, when paired with the Vision C5 DSP, it can serve applications requiring AI performance greater than 384 GMAC/sec.
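As a rough back-of-envelope check on those figures, the sketch below converts a GMAC/sec rating into MACs per clock cycle and into an upper bound on inferences per second. It assumes the 384 GMAC/sec peak corresponds to the 1.5GHz peak frequency quoted earlier, which the article does not state explicitly. The 2 GMAC network size and 50% utilisation are hypothetical values chosen for illustration, not measurements.

```python
PEAK_GMAC_PER_SEC = 384  # peak figure quoted in the article
PEAK_CLOCK_GHZ = 1.5     # peak frequency quoted in the article


def macs_per_cycle(gmac_per_sec, clock_ghz):
    """MACs completed per clock cycle implied by a throughput and a clock."""
    return gmac_per_sec / clock_ghz


def max_inferences_per_sec(gmac_per_sec, network_gmacs, utilisation=1.0):
    """Upper bound on inferences per second for a network of a given size.

    `utilisation` is the assumed fraction of peak throughput achieved.
    """
    return gmac_per_sec * utilisation / network_gmacs


implied_macs = macs_per_cycle(PEAK_GMAC_PER_SEC, PEAK_CLOCK_GHZ)

# Hypothetical workload: 2 GMACs per inference at 50% utilisation.
bound = max_inferences_per_sec(PEAK_GMAC_PER_SEC, network_gmacs=2.0,
                               utilisation=0.5)
```

Under that assumption the peak rating works out to 256 MACs per cycle, and the hypothetical workload is bounded at 96 inferences per second. Real throughput depends on memory bandwidth and on how well a given network maps to the hardware.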

It’s an exciting time for embedded vision and AI. With image processing requirements increasing, on-device AI growing in complexity and the underlying neural networks evolving rapidly, the need for a DSP that’s capable of meeting these demands efficiently is critical.